Kicking off a Project

Looking at the infamous project management triangle, we can identify three variables. When starting a project, these trigger three questions:

- What would we like to deliver?

- When would we like to deliver it?

- Who will deliver it?

When asked to come up with values for these items, I see the following activities occur:

- Scope is decided

- A team is decided

- A set of estimates is formulated

- A plan is built based on the estimates, dependencies and a buffer of some sort

At this point, the plan is put forward and a fourth question is asked: how confident are you that this is right? The standard response is somewhere between 70 and 90 percent. Anything less and we’d be openly admitting that we’re taking a pretty big risk on whether we can actually deliver, right? So where does this number actually come from?

There are a few ways I’ve seen the confidence value formulated. Most commonly I see a ‘feel’ provided by the project manager, based on information from a few subject matter experts. Some might look at a risk register as well, and I’ve even seen an entirely separate set of estimates generated for the same work by a different team. In the end, though, the primary contributor to our level of comfort is often the amount of ‘fat’ or contingency we’ve built in.

I’d like to ask: is this the best we can do?

What are we really looking at?

To answer this question we need to understand what we are trying to derive, and from what. When setting out to build any kind of product, we’re looking to exploit an opportunity or reduce a cost. In most cases these activities will also be time-sensitive, leading us to an ideal delivery date. This is our first piece of data.

Our second piece of data is our estimate. This provides us with a picture of what we think it will take to get the scope we’ve defined done. These should be relative estimates, and I usually encourage a planning poker approach for a small number of items. Anything more than around 30 and I’d suggest looking at affinity estimating. It’s worked for me and is a great way to get through a large number of items. I’ve used it to estimate ~400 items at a time in around 2 hours – though we padded that out with a couple of breaks to keep us sane.

The third and final required piece of data is also the most problematic to obtain. It’s a rate of delivery over time. Put differently: historically, how fast do we progress through our work? I don’t see this value stored in a lot of organisations, and it’s one of the first things we look to build, as a lot of value can be derived from it in both current and predictive terms. You can track it in a few ways: either as units completed in a period, e.g. points delivered per iteration, or as an average elapsed time between two points, e.g. time from ready for development to production. Either is fine; just be consistent and make sure you record it. If you don’t have the data, then an estimated value will do. Just be sure to record your delivery rate once you start and adjust your view based on this new data.
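Both ways of tracking delivery rate can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed tool: the `WorkItem` record, its field names and the fixed iteration length are hypothetical, and the sketch assumes every item was released on or after the first iteration's start date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class WorkItem:
    """Hypothetical record of one completed item; field names are illustrative."""
    points: int
    ready: date     # date the item became ready for development
    released: date  # date the item reached production


def points_per_iteration(items, iteration_start, iteration_days):
    """Units completed per period: total points released in each fixed-length
    iteration, including zero for iterations where nothing was released.
    Assumes at least one item and no releases before iteration_start."""
    totals = {}
    for item in items:
        index = (item.released - iteration_start).days // iteration_days
        totals[index] = totals.get(index, 0) + item.points
    return [totals.get(i, 0) for i in range(max(totals) + 1)]


def mean_elapsed_days(items):
    """Average elapsed time between two points: ready for development to production."""
    return sum((item.released - item.ready).days for item in items) / len(items)


history = [
    WorkItem(3, date(2024, 1, 1), date(2024, 1, 5)),
    WorkItem(5, date(2024, 1, 2), date(2024, 1, 12)),
    WorkItem(2, date(2024, 1, 8), date(2024, 1, 16)),
]
print(points_per_iteration(history, date(2024, 1, 1), 14))  # [8, 2]
print(mean_elapsed_days(history))
```

Recording the zero-point iterations matters: dropping them would overstate any mean rate calculated from the history later on.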

Confidence Measure

Now that we have identified our data, how do we work out how likely our ideal outcome actually is? With a historical view of how quickly we deliver work, we can calculate a mean rate of delivery.

Note: I’ll be using Excel-style formulas from here on. The function calls should be valid if you replace the names with cell references or numbers.

MEAN RATE = SUM OF DELIVERY RATES / COUNT OF MEASUREMENTS

This gives us an average rate per measurement period. I am currently using a daily rate of points, as it eases the extrapolation to dates in later calculations. The next calculation is to work out the standard deviation of the delivery rate.
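The mean calculation is the same in any tool. As a hedged sketch in Python, using made-up daily figures purely for illustration (they are not data from this article), the formula above and the standard library's `statistics.fmean` give the same answer:

```python
from statistics import fmean

# Illustrative history only: points delivered on each of eight working days.
daily_rates = [6, 9, 8, 10, 7, 8, 9, 7]

# MEAN RATE = SUM OF DELIVERY RATES / COUNT OF MEASUREMENTS
mean_rate = sum(daily_rates) / len(daily_rates)

# fmean is the standard library's arithmetic mean (Excel's AVERAGE does the same job).
assert mean_rate == fmean(daily_rates)
print(mean_rate)  # 8.0
```

If your history is recorded per iteration instead, dividing each iteration's total by the number of working days in it gives the equivalent daily figures.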

Note: We are assuming that the rate of delivery is normally distributed. This may not be the case, but the historical data lets us check how far reality departs from that assumption.

STD. DEV = STDEV.P(LIST OF DELIVERY RATE MEASUREMENTS)
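In Python, `STDEV.P` corresponds to `statistics.pstdev`, which divides by the number of measurements. The sketch below uses illustrative figures (again, not real data) and checks the library call against the definition written out by hand:

```python
from math import sqrt
from statistics import pstdev, stdev

# Illustrative history only: points delivered on each of eight working days.
daily_rates = [6, 9, 8, 10, 7, 8, 9, 7]
mean_rate = sum(daily_rates) / len(daily_rates)

# Population standard deviation (STDEV.P): divide the squared deviations by n.
by_hand = sqrt(sum((r - mean_rate) ** 2 for r in daily_rates) / len(daily_rates))
population_sd = pstdev(daily_rates)
assert abs(by_hand - population_sd) < 1e-12

# For comparison, STDEV.S / statistics.stdev divides by n - 1 and is slightly larger.
sample_sd = stdev(daily_rates)
print(round(population_sd, 3), round(sample_sd, 3))  # 1.225 1.309
```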

At this point we can start to calculate our chances of delivering at different rates. For example, with a mean rate of 8 and a standard deviation of 1.5, the chance of hitting a delivery rate of 15 units is about 0%, while for a rate of 11 it is around 2.28%.
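The worked example above can be reproduced with the standard library's `statistics.NormalDist` (Python 3.8+). This is a sketch under the same normality assumption as the text; `chance_of_rate` is a hypothetical helper name, and it treats "hitting" a rate as delivering at that rate or faster:

```python
from statistics import NormalDist

# Mean and standard deviation from the example in the text.
delivery = NormalDist(mu=8, sigma=1.5)


def chance_of_rate(rate):
    """Probability of delivering at `rate` or faster, assuming a normal distribution."""
    return 1 - delivery.cdf(rate)


print(f"{chance_of_rate(15):.2%}")  # 0.00%: more than four standard deviations above the mean
print(f"{chance_of_rate(11):.2%}")  # 2.28%: exactly two standard deviations above the mean
```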

To make this useful, we next need to know the rate of delivery required to achieve our target date. To calculate this we use the following:

RATE REQUIRED = SUM OF SCOPE / PERIODS UNTIL DATE
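Since the text works with a daily rate, "periods until date" means working days. A minimal sketch, assuming a Monday to Friday week with no holidays or leave (both would need subtracting in a real plan); the function names and the 400-point scope are hypothetical:

```python
from datetime import date, timedelta


def working_days_until(start, target):
    """Count Monday-Friday days from start (inclusive) up to target (exclusive).
    Public holidays and leave are ignored: an assumption, not a rule."""
    days = 0
    current = start
    while current < target:
        if current.weekday() < 5:  # 0-4 are Monday to Friday
            days += 1
        current += timedelta(days=1)
    return days


def rate_required(total_scope, periods_until_date):
    """RATE REQUIRED = SUM OF SCOPE / PERIODS UNTIL DATE."""
    return total_scope / periods_until_date


periods = working_days_until(date(2024, 9, 2), date(2024, 11, 25))
print(periods)                                # 60
print(round(rate_required(400, periods), 2))  # 6.67
```

Excel's `NETWORKDAYS` function performs a similar count, though it includes both the start and end dates and can take a list of holidays to exclude.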

We can then work out the probability of reaching that rate using the figures from our calculations:

PROBABILITY = 1 - NORM.DIST(RATE REQUIRED, MEAN RATE, STD. DEV, TRUE)
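For anyone working outside a spreadsheet, here is a sketch of the same formula in Python. `NORM.DIST(x, mean, sd, TRUE)` is the cumulative normal distribution, which `statistics.NormalDist(...).cdf(x)` also computes; the function name and parameter names are illustrative:

```python
from statistics import NormalDist


def probability_of_required_rate(rate_required, mean_rate, rate_sd):
    """Equivalent of 1 - NORM.DIST(rate_required, mean_rate, rate_sd, TRUE):
    the chance of delivering at rate_required or faster, assuming the
    delivery rate is normally distributed with the given mean and std. dev."""
    return 1 - NormalDist(mean_rate, rate_sd).cdf(rate_required)


# A required rate equal to the mean gives a coin flip; each extra
# standard deviation of required pace cuts the odds sharply.
print(probability_of_required_rate(8, 8, 1.5))             # 0.5
print(round(probability_of_required_rate(9.5, 8, 1.5), 3))  # 0.159
```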

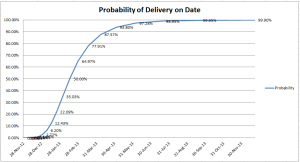

Mapping this probability across a range of target dates, we get something like the following:

Delivery Probability
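A curve like this can be generated by repeating the calculation for a series of candidate target dates. The sketch below is an assumption about how such a chart might be produced, not the author's spreadsheet: it counts Monday to Friday working days only, ignores holidays such as the Christmas period, and uses made-up inputs (400 points of scope, plus the mean of 8 and standard deviation of 1.5 from the earlier example).

```python
from datetime import date, timedelta
from statistics import NormalDist


def delivery_curve(scope, mean_rate, rate_sd, start, targets):
    """Return (target date, probability of finishing by it) for each target."""
    model = NormalDist(mean_rate, rate_sd)
    curve = []
    for target in targets:
        # Working days from start (inclusive) to target (exclusive), Mon-Fri only.
        days = sum(
            1
            for offset in range((target - start).days)
            if (start + timedelta(days=offset)).weekday() < 5
        )
        if days == 0:
            curve.append((target, 0.0))  # no working time left means no chance
            continue
        rate_required = scope / days
        curve.append((target, 1 - model.cdf(rate_required)))
    return curve


start = date(2024, 9, 2)
targets = [start + timedelta(weeks=w) for w in range(8, 14)]
for target, probability in delivery_curve(400, 8, 1.5, start, targets):
    print(target, f"{probability:.0%}")
```

Feeding the resulting pairs into any charting tool gives a probability curve over dates; subtracting known holidays from the working-day count would pull the later dates down accordingly.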

What doesn’t this graph tell me?

This graph is a simple probability graph based on historical delivery rate and estimated scope. Both of these inputs have flaws: estimates are often out by a sizeable factor, and scope changes.

What it does do is give us a much better idea of whether our dates are even viable. For example, you can see from the graph that, for the figures used, the chance of reaching a target date in early January would be pretty low, especially once we start considering other factors such as reduced availability over the Christmas period.

What benefit does this provide?

Using data to discover our probability of delivering on a specific date allows us to make a more educated decision on whether the risk we are taking to make that date is worth it. This value calculation is influenced by the driver for the work in the first place and its attributes, such as cost of delay. Armed with this data, we can start deciding on influence strategies and even weigh up other opportunities more effectively, rather than relying on intuition to drive our delivery decisions.